This post walks through installing a single-node Kubernetes cluster with RKE on a bare CentOS 8.2.2004 (Core) machine, working as the root account.

1. OS prerequisites

Remove lock files

If .lock files exist under /etc, the installation may not go smoothly.

rm -rf /etc/*.lock

Change the hostname

In the command below, replace {hostname} with the name you want.

hostnamectl set-hostname {hostname}

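Turn off swap

Swap lets the system use part of the disk as if it were memory, either when memory runs low or when hibernating.

kubelet, which assigns and manages Pods, cannot handle swap, so swap must be turned off permanently.

# Turn swap off immediately

swapoff -a

# Keep swap off across reboots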

sudo sed -i.bak '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
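
The sed command above only matches lines where swap is surrounded by single spaces. As a minimal sketch, assuming an fstab whose columns may also be separated by tabs, the same edit can be keyed on the third field instead. The helper name disable_swap_entries is hypothetical and not part of the original procedure.

```shell
#!/bin/sh
# Comment out every active swap entry in an fstab-style file.
# Hypothetical helper; the file to edit is passed as the first argument.
disable_swap_entries() {
    fstab_file="$1"
    # Keep a backup next to the file, like the sed -i.bak command does.
    cp "$fstab_file" "$fstab_file.bak"
    # Field 3 is the filesystem type; lines already commented out are skipped.
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab_file.bak" > "$fstab_file"
}

# Example (as root):
#   disable_swap_entries /etc/fstab && swapoff -a
```

Afterwards, swapon --show should print nothing if no swap device is active.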

Create an ssh key

RKE works over ssh, which makes it easy to set up nodes on a single host or across several hosts.

For details, see

https://rancher.com/blog/2018/2018-09-26-setup-basic-kubernetes-cluster-with-ease-using-rke/

This guide installs on a single node, so the ssh key created here is simply used to connect to localhost.

To create the key, run the command below and press Enter three times to accept the defaults.

ssh-keygen

TIP

To skip the prompts, for example in a bash script, use the following command instead.

ssh-keygen -t rsa -q -f /root/.ssh/id_rsa -N '' <<<y

Append the public key to authorized_keys so that connecting to localhost does not ask for a password.

cat /root/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
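
Running the cat command more than once appends the same key again each time. A minimal sketch of an idempotent variant follows; the helper name authorize_key is hypothetical, and it assumes the public key file holds one key per line.

```shell
#!/bin/sh
# Append a public key to authorized_keys only if it is not already there.
# Hypothetical helper: $1 = public key file, $2 = authorized_keys file.
authorize_key() {
    pubkey_file="$1"
    auth_file="$2"
    auth_dir=$(dirname "$auth_file")
    # sshd ignores keys when these permissions are too open.
    mkdir -p "$auth_dir"
    chmod 700 "$auth_dir"
    touch "$auth_file"
    chmod 600 "$auth_file"
    # -x whole-line match, -F fixed strings, -f read the patterns from the key file
    if ! grep -qxFf "$pubkey_file" "$auth_file"; then
        cat "$pubkey_file" >> "$auth_file"
    fi
}

# Example (as root):
#   authorize_key /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys
```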

To test the connection, run the command below.

( My host's IP is 192.168.253.140; replace it with your own. )

ssh -q -o StrictHostKeyChecking=no root@192.168.253.140

Once connected, a line starting with Last login: is displayed.

Finally, type exit in the terminal to close the ssh session to localhost.

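2. RKE prerequisites

This section covers the OS requirements listed in the RKE documentation. ( rancher.com/docs/rke/latest/en/os/ )

Load kernel modules

The script below is based on the module check script from the RKE documentation, modified to load any module that is not yet loaded.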

#!/bin/sh

# Load each kernel module RKE expects if lsmod does not list it yet.
for module in br_netfilter ip6_udp_tunnel ip_set ip_set_hash_ip ip_set_hash_net iptable_filter iptable_nat iptable_mangle iptable_raw nf_conntrack_netlink nf_conntrack nf_conntrack_ipv4 nf_defrag_ipv4 nf_nat nf_nat_ipv4 nf_nat_masquerade_ipv4 nfnetlink udp_tunnel veth vxlan x_tables xt_addrtype xt_conntrack xt_comment xt_mark xt_multiport xt_nat xt_recent xt_set xt_statistic xt_tcpudp; do
    if ! lsmod | grep -q "$module"; then
        echo "module $module is not present, try to install..."
        # Branch on modprobe's exit status directly instead of checking $? afterwards.
        if modprobe "$module"; then
            printf '\033[32;1mSuccessfully installed %s!\033[0m\n' "$module"
        else
            printf '\033[31;1mInstall %s failed!!!\033[0m\n' "$module"
        fi
    fi
done

Save the code above as module.sh, then make it executable and run it as shown below.

chmod +x module.sh

./module.sh

Partway through, loading the nf_nat_masquerade_ipv4 module fails; I have not yet worked out exactly why.

It does not get in the way of the installation, so ignore it for now and continue.
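
modprobe loads a module only for the current boot. On systemd-based distributions such as CentOS 8, module names listed in a .conf file under /etc/modules-load.d/ are loaded at boot. A minimal sketch follows; the helper name write_modules_conf and the file name rke.conf are hypothetical, and the module list in the example is shortened for illustration.

```shell
#!/bin/sh
# Write one module name per line to a modules-load.d style file.
# Hypothetical helper: $1 = target file, remaining arguments = module names.
write_modules_conf() {
    conf_file="$1"
    shift
    printf '%s\n' "$@" > "$conf_file"
}

# Example (as root), with a shortened module list:
#   write_modules_conf /etc/modules-load.d/rke.conf br_netfilter ip_set veth vxlan
```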

Bridge settings

Copy and run the command below to configure the bridge settings.

cat <<EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

Run the command below to apply the settings.

sysctl --system
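
To confirm that the values took effect, each key can be read back from /proc/sys. A minimal sketch follows; the helper name sysctl_is_enabled is hypothetical, and its second argument exists only so the function can be tried against a test directory. Note that the net.bridge.* keys exist only while the br_netfilter module is loaded.

```shell
#!/bin/sh
# Succeed if the given sysctl key currently reads 1.
# Hypothetical helper: $1 = dotted key, $2 = optional root (defaults to /proc/sys).
sysctl_is_enabled() {
    key="$1"
    proc_root="${2:-/proc/sys}"
    # net.ipv4.ip_forward -> net/ipv4/ip_forward
    path="$proc_root/$(printf '%s' "$key" | tr '.' '/')"
    [ -r "$path" ] && [ "$(cat "$path")" = "1" ]
}

# Example:
#   for key in net.bridge.bridge-nf-call-ip6tables \
#              net.bridge.bridge-nf-call-iptables \
#              net.ipv4.ip_forward; do
#       sysctl_is_enabled "$key" || echo "$key is not set to 1"
#   done
```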

Open firewall ports

Copy and run the commands below.

for i in 22 80 443 2376 2379 2380 6443 8472 9099 10250 10251 10252 10254 30000-32767; do

firewall-cmd --add-port=${i}/tcp --permanent

done

firewall-cmd --reload

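In addition, flannel will be used as the CNI, so open its UDP ports as well: 8285/udp for flannel's udp backend and 8472/udp for its VXLAN backend (the loop above opens 8472 as TCP only).

firewall-cmd --add-port=8285/udp --permanent

firewall-cmd --add-port=8472/udp --permanent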

firewall-cmd --reload
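
Because some ports are TCP and others UDP, it can help to write the protocol next to each port and pass everything to firewall-cmd in one call. A minimal sketch follows; the helper name port_args is hypothetical, and the port list in the example is shortened for illustration.

```shell
#!/bin/sh
# Print one --add-port argument per port/protocol pair given as arguments.
# Hypothetical helper, e.g. port_args 6443/tcp 8472/udp
port_args() {
    for spec in "$@"; do
        printf '%s%s\n' '--add-port=' "$spec"
    done
}

# Example (as root); the command substitution is left unquoted on purpose
# so that each argument becomes a separate word:
#   firewall-cmd --permanent $(port_args 6443/tcp 8472/udp 30000-32767/tcp)
#   firewall-cmd --reload
```

firewall-cmd --list-ports shows which ports ended up open after the reload.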

3. Installation

In brief, the installation proceeds in this order: install Docker -> download and configure RKE -> install with RKE -> install kubectl as the Kubernetes CLI.

Install Docker

CentOS 8 uses the dnf package manager.

The commands below add the Docker repo and install Docker.

As of 2020-12-25, docker 20.10.1 is the current stable package, but RKE supports Docker only up to 19.03, so this guide explicitly pins 19.03.14-3.el8, the last stable 19.03 release.

The available docker packages are listed at download.docker.com/linux/centos/8/x86_64/stable/Packages/.

dnf config-manager --add-repo=https://download.docker.com/linux/centos/docker-ce.repo

dnf install docker-ce-3:19.03.14-3.el8

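Once docker is installed, the service has to be started.

Run the commands below in order to start docker and confirm that it is running.

# Reload the systemd daemon

systemctl daemon-reload

# Start docker

systemctl start docker

# Start docker automatically at boot

systemctl enable docker

# List all docker containers, including stopped ones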

docker ps -a
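
Since the Docker version matters here, it may be worth checking that the running daemon really is in the 19.03 series before moving on. A minimal sketch follows; the helper name docker_version_supported is hypothetical, and it only checks for the 19.03 series pinned in this guide, not every version RKE accepts.

```shell
#!/bin/sh
# Succeed if the given version string belongs to the 19.03 series.
# Hypothetical helper: $1 = version string such as 19.03.14
docker_version_supported() {
    case "$1" in
        19.03.*) return 0 ;;
        *)       return 1 ;;
    esac
}

# Example:
#   v=$(docker version --format '{{.Server.Version}}')
#   docker_version_supported "$v" || echo "unexpected docker version: $v"
```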

When the docker service starts, it sets the policy of the iptables FORWARD chain to DROP, so change it back to ACCEPT.

# Check the current iptables rules

iptables -L

# Change the FORWARD policy to ACCEPT

iptables -P FORWARD ACCEPT

# Confirm that the policy is now ACCEPT

iptables -L
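
Instead of reading through the full iptables -L listing, the policy can be picked out of iptables -S FORWARD, whose output contains a line of the form -P FORWARD <policy>. A minimal sketch follows; the helper name forward_policy is hypothetical.

```shell
#!/bin/sh
# Read `iptables -S FORWARD` output on stdin and print the chain policy.
# Hypothetical helper; prints nothing if no policy line is found.
forward_policy() {
    awk '$1 == "-P" && $2 == "FORWARD" { print $3; exit }'
}

# Example (as root):
#   [ "$(iptables -S FORWARD | forward_policy)" = "ACCEPT" ] || iptables -P FORWARD ACCEPT
```

The policy set with iptables -P is not saved anywhere, so the check may need to be repeated after a reboot or a docker restart.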

Finally, add the docker0 interface to the firewall's trusted zone.

firewall-cmd --permanent --zone=trusted --change-interface=docker0

firewall-cmd --reload

Download RKE

As of 2020-12-25, the latest full release excluding pre-releases is 1.2.3; releases are listed at github.com/rancher/rke/tags.

First, download RKE and set it up so it can be run.

# Install wget

dnf install wget -y

# Download

wget https://github.com/rancher/rke/releases/download/v1.2.3/rke_linux-amd64

# Rename

mv rke_linux-amd64 rke

# Make it executable

chmod +x rke

# Move it into /usr/bin, which is on PATH, so it can be run from anywhere

mv rke /usr/bin/rke
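
The rename, chmod, and move steps can also be collapsed into a single call to install from coreutils, which copies a file and sets its mode in one go. A minimal sketch follows; the helper name install_binary is hypothetical. Unlike mv, install leaves the downloaded file in place.

```shell
#!/bin/sh
# Copy a downloaded binary to its destination with mode 0755.
# Hypothetical helper: $1 = downloaded file, $2 = destination path.
install_binary() {
    src="$1"
    dest="$2"
    mkdir -p "$(dirname "$dest")"
    install -m 0755 "$src" "$dest"
}

# Example (as root):
#   install_binary rke_linux-amd64 /usr/bin/rke
```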

Configure RKE

First, use the command below to have the RKE binary generate a cluster.yml file.

rke config

Answer each prompt with the appropriate value; the prompts are as follows.

# Path of the cluster-level SSH private key ( press Enter for the default )

[+] Cluster Level SSH Private Key Path [~/.ssh/id_rsa]:

# Number of hosts to install RKE on ( single node, so press Enter for the default )

[+] Number of Hosts [1]:

# SSH address of the host ( installing locally, so enter the local IP )

[+] SSH Address of host (1) [none]: 192.168.253.140

# SSH port of the host ( press Enter for the default )

[+] SSH Port of host (1) [22]:

# SSH private key path of the host

[+] SSH Private Key Path of host (192.168.253.140) [none]: ~/.ssh/id_rsa

# SSH user of the host ( enter root )

[+] SSH User of host (192.168.253.140) [ubuntu]: root

# Should the host act as a Control Plane host? ( press Enter for the default )

[+] Is host (192.168.253.140) a Control Plane host (y/n)? [y]:

# Should the host act as a Worker host? ( enter y, then press Enter )

[+] Is host (192.168.253.140) a Worker host (y/n)? [n]: y

# Should the host act as an etcd host? ( enter y, then press Enter )

[+] Is host (192.168.253.140) an etcd host (y/n)? [n]: y

# Press Enter for the default

[+] Override Hostname of host (192.168.253.140) [none]:

# Enter the same value as the host's SSH address

[+] Internal IP of host (192.168.253.140) [none]: 192.168.253.140

# Press Enter for the default

[+] Docker socket path on host (192.168.253.140) [/var/run/docker.sock]:

# Container Network Interface plugin ( enter flannel )

[+] Network Plugin Type (flannel, calico, weave, canal) [canal]: flannel

# Press Enter for the default

[+] Authentication Strategy [x509]:

# Press Enter for the default

[+] Authorization Mode (rbac, none) [rbac]:

# Press Enter for the default

[+] Kubernetes Docker image [rancher/hyperkube:v1.18.3-rancher2]:

# Cluster domain ( change it if needed; here, press Enter for the default )

[+] Cluster domain [cluster.local]:

# Press Enter for the default

[+] Service Cluster IP Range [10.43.0.0/16]:

# Press Enter for the default

[+] Enable PodSecurityPolicy [n]:

# When using flannel, set this to 10.244.0.0/16 ( the default network in flannel's upstream manifest )

[+] Cluster Network CIDR [10.42.0.0/16]: 10.244.0.0/16

# Press Enter for the default

[+] Cluster DNS Service IP [10.43.0.10]:

# Press Enter for the default

[+] Add addon manifest URLs or YAML files [no]:

When all prompts are answered, cluster.yml is created in the current directory.

cluster.yml is also needed later when removing or modifying nodes, so keep it somewhere safe.
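
For orientation, the non-default answers above correspond roughly to the fragment written by the sketch below. This is an assumption-laden illustration, not the file rke config actually produces: the generated cluster.yml contains many more fields, the helper name write_minimal_cluster_yml is hypothetical, and the field names are taken from my reading of the RKE cluster.yml format, so check them against the generated file.

```shell
#!/bin/sh
# Write a minimal, illustrative cluster.yml fragment for one node.
# Hypothetical helper: $1 = output file, $2 = node IP address.
write_minimal_cluster_yml() {
    out_file="$1"
    node_ip="$2"
    # Only $node_ip is expanded in the heredoc; ~ is written literally.
    cat > "$out_file" <<EOF
nodes:
  - address: $node_ip
    internal_address: $node_ip
    port: "22"
    user: root
    role: [controlplane, worker, etcd]
    ssh_key_path: ~/.ssh/id_rsa
network:
  plugin: flannel
services:
  kube-controller:
    cluster_cidr: 10.244.0.0/16
EOF
}

# Example, writing to a scratch path for comparison with the generated file:
#   write_minimal_cluster_yml /tmp/cluster-sketch.yml 192.168.253.140
```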

Install with RKE

Without an explicit cluster.yml, the command below automatically uses the cluster.yml in the current directory.

rke up

To specify cluster.yml explicitly, use the command below. ( /tmp/cluster.yml is used as an example )

rke up --config /tmp/cluster.yml

Once the installation starts, you will see logs like the following.

INFO[0000] Running RKE version: v1.2.3

INFO[0000] Initiating Kubernetes cluster

INFO[0000] [dialer] Setup tunnel for host [192.168.253.140]

INFO[0000] Checking if container [cluster-state-deployer] is running on host [192.168.253.140], try #1

INFO[0000] Pulling image [rancher/rke-tools:v0.1.66] on host [192.168.253.140], try #1

INFO[0011] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0011] Starting container [cluster-state-deployer] on host [192.168.253.140], try #1

INFO[0012] [state] Successfully started [cluster-state-deployer] container on host [192.168.253.140]

INFO[0012] [certificates] Generating CA kubernetes certificates

INFO[0012] [certificates] Generating Kubernetes API server aggregation layer requestheader client CA certificates

INFO[0012] [certificates] GenerateServingCertificate is disabled, checking if there are unused kubelet certificates

INFO[0012] [certificates] Generating Kubernetes API server certificates

INFO[0012] [certificates] Generating Service account token key

INFO[0012] [certificates] Generating Kube Controller certificates

INFO[0012] [certificates] Generating Kube Scheduler certificates

INFO[0012] [certificates] Generating Kube Proxy certificates

INFO[0012] [certificates] Generating Node certificate

INFO[0012] [certificates] Generating admin certificates and kubeconfig

INFO[0012] [certificates] Generating Kubernetes API server proxy client certificates

INFO[0013] [certificates] Generating kube-etcd-192-168-253-140 certificate and key

INFO[0013] Successfully Deployed state file at [./cluster.rkestate]

INFO[0013] Building Kubernetes cluster

INFO[0013] [dialer] Setup tunnel for host [192.168.253.140]

INFO[0013] [network] Deploying port listener containers

INFO[0013] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0013] Starting container [rke-etcd-port-listener] on host [192.168.253.140], try #1

INFO[0013] [network] Successfully started [rke-etcd-port-listener] container on host [192.168.253.140]

INFO[0013] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0013] Starting container [rke-cp-port-listener] on host [192.168.253.140], try #1

INFO[0014] [network] Successfully started [rke-cp-port-listener] container on host [192.168.253.140]

INFO[0014] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0014] Starting container [rke-worker-port-listener] on host [192.168.253.140], try #1

INFO[0014] [network] Successfully started [rke-worker-port-listener] container on host [192.168.253.140]

INFO[0014] [network] Port listener containers deployed successfully

INFO[0014] [network] Running control plane -> etcd port checks

INFO[0014] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0014] Starting container [rke-port-checker] on host [192.168.253.140], try #1

INFO[0014] [network] Successfully started [rke-port-checker] container on host [192.168.253.140]

INFO[0014] Removing container [rke-port-checker] on host [192.168.253.140], try #1

INFO[0014] [network] Running control plane -> worker port checks

INFO[0014] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0014] Starting container [rke-port-checker] on host [192.168.253.140], try #1

INFO[0015] [network] Successfully started [rke-port-checker] container on host [192.168.253.140]

INFO[0015] Removing container [rke-port-checker] on host [192.168.253.140], try #1

INFO[0015] [network] Running workers -> control plane port checks

INFO[0015] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0015] Starting container [rke-port-checker] on host [192.168.253.140], try #1

INFO[0015] [network] Successfully started [rke-port-checker] container on host [192.168.253.140]

INFO[0015] Removing container [rke-port-checker] on host [192.168.253.140], try #1

INFO[0015] [network] Checking KubeAPI port Control Plane hosts

INFO[0015] [network] Removing port listener containers

INFO[0015] Removing container [rke-etcd-port-listener] on host [192.168.253.140], try #1

INFO[0015] [remove/rke-etcd-port-listener] Successfully removed container on host [192.168.253.140]

INFO[0015] Removing container [rke-cp-port-listener] on host [192.168.253.140], try #1

INFO[0015] [remove/rke-cp-port-listener] Successfully removed container on host [192.168.253.140]

INFO[0015] Removing container [rke-worker-port-listener] on host [192.168.253.140], try #1

INFO[0016] [remove/rke-worker-port-listener] Successfully removed container on host [192.168.253.140]

INFO[0016] [network] Port listener containers removed successfully

INFO[0016] [certificates] Deploying kubernetes certificates to Cluster nodes

INFO[0016] Checking if container [cert-deployer] is running on host [192.168.253.140], try #1

INFO[0016] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0016] Starting container [cert-deployer] on host [192.168.253.140], try #1

INFO[0016] Checking if container [cert-deployer] is running on host [192.168.253.140], try #1

INFO[0021] Checking if container [cert-deployer] is running on host [192.168.253.140], try #1

INFO[0021] Removing container [cert-deployer] on host [192.168.253.140], try #1

INFO[0021] [reconcile] Rebuilding and updating local kube config

INFO[0021] Successfully Deployed local admin kubeconfig at [./kube_config_cluster.yml]

INFO[0021] [certificates] Successfully deployed kubernetes certificates to Cluster nodes

INFO[0021] [file-deploy] Deploying file [/etc/kubernetes/audit-policy.yaml] to node [192.168.253.140]

INFO[0021] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0021] Starting container [file-deployer] on host [192.168.253.140], try #1

INFO[0021] Successfully started [file-deployer] container on host [192.168.253.140]

INFO[0021] Waiting for [file-deployer] container to exit on host [192.168.253.140]

INFO[0021] Waiting for [file-deployer] container to exit on host [192.168.253.140]

INFO[0021] Container [file-deployer] is still running on host [192.168.253.140]: stderr: [], stdout: []

INFO[0022] Waiting for [file-deployer] container to exit on host [192.168.253.140]

INFO[0022] Removing container [file-deployer] on host [192.168.253.140], try #1

INFO[0022] [remove/file-deployer] Successfully removed container on host [192.168.253.140]

INFO[0022] [/etc/kubernetes/audit-policy.yaml] Successfully deployed audit policy file to Cluster control nodes

INFO[0022] [reconcile] Reconciling cluster state

INFO[0022] [reconcile] This is newly generated cluster

INFO[0022] Pre-pulling kubernetes images

INFO[0022] Pulling image [rancher/hyperkube:v1.19.4-rancher1] on host [192.168.253.140], try #1

INFO[0046] Image [rancher/hyperkube:v1.19.4-rancher1] exists on host [192.168.253.140]

INFO[0046] Kubernetes images pulled successfully

INFO[0046] [etcd] Building up etcd plane..

INFO[0046] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0047] Starting container [etcd-fix-perm] on host [192.168.253.140], try #1

INFO[0047] Successfully started [etcd-fix-perm] container on host [192.168.253.140]

INFO[0047] Waiting for [etcd-fix-perm] container to exit on host [192.168.253.140]

INFO[0047] Waiting for [etcd-fix-perm] container to exit on host [192.168.253.140]

INFO[0047] Container [etcd-fix-perm] is still running on host [192.168.253.140]: stderr: [], stdout: []

INFO[0048] Waiting for [etcd-fix-perm] container to exit on host [192.168.253.140]

INFO[0048] Removing container [etcd-fix-perm] on host [192.168.253.140], try #1

INFO[0048] [remove/etcd-fix-perm] Successfully removed container on host [192.168.253.140]

INFO[0048] Pulling image [rancher/coreos-etcd:v3.4.13-rancher1] on host [192.168.253.140], try #1

INFO[0055] Image [rancher/coreos-etcd:v3.4.13-rancher1] exists on host [192.168.253.140]

INFO[0055] Starting container [etcd] on host [192.168.253.140], try #1

INFO[0056] [etcd] Successfully started [etcd] container on host [192.168.253.140]

INFO[0056] [etcd] Running rolling snapshot container [etcd-snapshot-once] on host [192.168.253.140]

INFO[0056] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0056] Starting container [etcd-rolling-snapshots] on host [192.168.253.140], try #1

INFO[0056] [etcd] Successfully started [etcd-rolling-snapshots] container on host [192.168.253.140]

INFO[0061] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0061] Starting container [rke-bundle-cert] on host [192.168.253.140], try #1

INFO[0061] [certificates] Successfully started [rke-bundle-cert] container on host [192.168.253.140]

INFO[0061] Waiting for [rke-bundle-cert] container to exit on host [192.168.253.140]

INFO[0061] Container [rke-bundle-cert] is still running on host [192.168.253.140]: stderr: [], stdout: []

INFO[0062] Waiting for [rke-bundle-cert] container to exit on host [192.168.253.140]

INFO[0062] [certificates] successfully saved certificate bundle [/opt/rke/etcd-snapshots//pki.bundle.tar.gz] on host [192.168.253.140]

INFO[0062] Removing container [rke-bundle-cert] on host [192.168.253.140], try #1

INFO[0062] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0062] Starting container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0062] [etcd] Successfully started [rke-log-linker] container on host [192.168.253.140]

INFO[0062] Removing container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0062] [remove/rke-log-linker] Successfully removed container on host [192.168.253.140]

INFO[0062] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0063] Starting container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0063] [etcd] Successfully started [rke-log-linker] container on host [192.168.253.140]

INFO[0063] Removing container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0063] [remove/rke-log-linker] Successfully removed container on host [192.168.253.140]

INFO[0063] [etcd] Successfully started etcd plane.. Checking etcd cluster health

INFO[0063] [etcd] etcd host [192.168.253.140] reported healthy=true

INFO[0063] [controlplane] Building up Controller Plane..

INFO[0063] Checking if container [service-sidekick] is running on host [192.168.253.140], try #1

INFO[0063] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0063] Image [rancher/hyperkube:v1.19.4-rancher1] exists on host [192.168.253.140]

INFO[0063] Starting container [kube-apiserver] on host [192.168.253.140], try #1

INFO[0063] [controlplane] Successfully started [kube-apiserver] container on host [192.168.253.140]

INFO[0063] [healthcheck] Start Healthcheck on service [kube-apiserver] on host [192.168.253.140]

INFO[0070] [healthcheck] service [kube-apiserver] on host [192.168.253.140] is healthy

INFO[0070] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0070] Starting container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0071] [controlplane] Successfully started [rke-log-linker] container on host [192.168.253.140]

INFO[0071] Removing container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0071] [remove/rke-log-linker] Successfully removed container on host [192.168.253.140]

INFO[0071] Image [rancher/hyperkube:v1.19.4-rancher1] exists on host [192.168.253.140]

INFO[0071] Starting container [kube-controller-manager] on host [192.168.253.140], try #1

INFO[0071] [controlplane] Successfully started [kube-controller-manager] container on host [192.168.253.140]

INFO[0071] [healthcheck] Start Healthcheck on service [kube-controller-manager] on host [192.168.253.140]

INFO[0076] [healthcheck] service [kube-controller-manager] on host [192.168.253.140] is healthy

INFO[0076] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0076] Starting container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0077] [controlplane] Successfully started [rke-log-linker] container on host [192.168.253.140]

INFO[0077] Removing container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0077] [remove/rke-log-linker] Successfully removed container on host [192.168.253.140]

INFO[0077] Image [rancher/hyperkube:v1.19.4-rancher1] exists on host [192.168.253.140]

INFO[0077] Starting container [kube-scheduler] on host [192.168.253.140], try #1

INFO[0077] [controlplane] Successfully started [kube-scheduler] container on host [192.168.253.140]

INFO[0077] [healthcheck] Start Healthcheck on service [kube-scheduler] on host [192.168.253.140]

INFO[0082] [healthcheck] service [kube-scheduler] on host [192.168.253.140] is healthy

INFO[0082] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0082] Starting container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0082] [controlplane] Successfully started [rke-log-linker] container on host [192.168.253.140]

INFO[0082] Removing container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0083] [remove/rke-log-linker] Successfully removed container on host [192.168.253.140]

INFO[0083] [controlplane] Successfully started Controller Plane..

INFO[0083] [authz] Creating rke-job-deployer ServiceAccount

INFO[0083] [authz] rke-job-deployer ServiceAccount created successfully

INFO[0083] [authz] Creating system:node ClusterRoleBinding

INFO[0083] [authz] system:node ClusterRoleBinding created successfully

INFO[0083] [authz] Creating kube-apiserver proxy ClusterRole and ClusterRoleBinding

INFO[0083] [authz] kube-apiserver proxy ClusterRole and ClusterRoleBinding created successfully

INFO[0083] Successfully Deployed state file at [./cluster.rkestate]

INFO[0083] [state] Saving full cluster state to Kubernetes

INFO[0083] [state] Successfully Saved full cluster state to Kubernetes ConfigMap: full-cluster-state

INFO[0083] [worker] Building up Worker Plane..

INFO[0083] Checking if container [service-sidekick] is running on host [192.168.253.140], try #1

INFO[0083] [sidekick] Sidekick container already created on host [192.168.253.140]

INFO[0083] Image [rancher/hyperkube:v1.19.4-rancher1] exists on host [192.168.253.140]

INFO[0083] Starting container [kubelet] on host [192.168.253.140], try #1

INFO[0083] [worker] Successfully started [kubelet] container on host [192.168.253.140]

INFO[0083] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.253.140]

INFO[0088] [healthcheck] service [kubelet] on host [192.168.253.140] is healthy

INFO[0088] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0088] Starting container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0088] [worker] Successfully started [rke-log-linker] container on host [192.168.253.140]

INFO[0088] Removing container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0088] [remove/rke-log-linker] Successfully removed container on host [192.168.253.140]

INFO[0088] Image [rancher/hyperkube:v1.19.4-rancher1] exists on host [192.168.253.140]

INFO[0089] Starting container [kube-proxy] on host [192.168.253.140], try #1

INFO[0089] [worker] Successfully started [kube-proxy] container on host [192.168.253.140]

INFO[0089] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.253.140]

INFO[0094] [healthcheck] service [kube-proxy] on host [192.168.253.140] is healthy

INFO[0094] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0094] Starting container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0094] [worker] Successfully started [rke-log-linker] container on host [192.168.253.140]

INFO[0094] Removing container [rke-log-linker] on host [192.168.253.140], try #1

INFO[0094] [remove/rke-log-linker] Successfully removed container on host [192.168.253.140]

INFO[0094] [worker] Successfully started Worker Plane..

INFO[0094] Image [rancher/rke-tools:v0.1.66] exists on host [192.168.253.140]

INFO[0094] Starting container [rke-log-cleaner] on host [192.168.253.140], try #1

INFO[0095] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.253.140]

INFO[0095] Removing container [rke-log-cleaner] on host [192.168.253.140], try #1

INFO[0095] [remove/rke-log-cleaner] Successfully removed container on host [192.168.253.140]

INFO[0095] [sync] Syncing nodes Labels and Taints

INFO[0095] [sync] Successfully synced nodes Labels and Taints

INFO[0095] [network] Setting up network plugin: flannel

INFO[0095] [addons] Saving ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0095] [addons] Successfully saved ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0095] [addons] Executing deploy job rke-network-plugin

INFO[0105] [addons] Setting up coredns

INFO[0105] [addons] Saving ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0105] [addons] Successfully saved ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0105] [addons] Executing deploy job rke-coredns-addon

INFO[0110] [addons] CoreDNS deployed successfully

INFO[0110] [dns] DNS provider coredns deployed successfully

INFO[0110] [addons] Setting up Metrics Server

INFO[0110] [addons] Saving ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0110] [addons] Successfully saved ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0110] [addons] Executing deploy job rke-metrics-addon

INFO[0115] [addons] Metrics Server deployed successfully

INFO[0115] [ingress] Setting up nginx ingress controller

INFO[0115] [addons] Saving ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0115] [addons] Successfully saved ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0115] [addons] Executing deploy job rke-ingress-controller

INFO[0120] [ingress] ingress controller nginx deployed successfully

INFO[0120] [addons] Setting up user addons

INFO[0120] [addons] no user addons defined

INFO[0120] Finished building Kubernetes cluster successfully

When the installation finishes, kube_config_cluster.yml is created.

This file is the Kubernetes config file that kubectl uses.

Install kubectl

To use the Kubernetes CLI, download kubectl and set it up so it can be run.

# Download

curl -LO https://storage.googleapis.com/kubernetes-release/release/`curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt`/bin/linux/amd64/kubectl

# Make it executable

chmod +x kubectl

# Move it into /usr/bin, which is on PATH, so it can be run from anywhere

mv kubectl /usr/bin/kubectl
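
The download command fetches whatever the latest stable kubectl is at the time, while the install log above shows the cluster running v1.19.4 (rancher/hyperkube:v1.19.4-rancher1). kubectl is supported within one minor version of the API server, so pinning the version may be safer over time. A minimal sketch follows, assuming the same download URL layout as the command above; the helper name kubectl_url is hypothetical.

```shell
#!/bin/sh
# Print the kubectl download URL for a given release, using the same
# URL layout as the download command in this guide.
# Hypothetical helper: $1 = version such as v1.19.4
kubectl_url() {
    version="$1"
    printf 'https://storage.googleapis.com/kubernetes-release/release/%s/bin/linux/amd64/kubectl\n' "$version"
}

# Example:
#   curl -LO "$(kubectl_url v1.19.4)"
```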

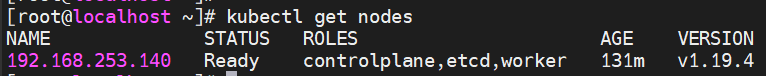

Now check the node using kube_config_cluster.yml.

kubectl --kubeconfig kube_config_cluster.yml get nodes

The output shows a single node installed with the controlplane, etcd, and worker roles.
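
In a script, the same check can be made without reading the table by eye. A minimal sketch follows; the helper name all_nodes_ready is hypothetical, and it treats only a STATUS of exactly Ready as ready.

```shell
#!/bin/sh
# Read `kubectl get nodes --no-headers` output on stdin.
# Succeed only if at least one node is listed and every node's
# STATUS column (field 2) is exactly "Ready".
all_nodes_ready() {
    awk 'BEGIN { n = 0; bad = 0 }
         NF >= 2 { n++; if ($2 != "Ready") bad++ }
         END { exit (n > 0 && bad == 0) ? 0 : 1 }'
}

# Example:
#   kubectl --kubeconfig kube_config_cluster.yml get nodes --no-headers | all_nodes_ready \
#       && echo "all nodes are Ready"
```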

Passing kube_config_cluster.yml on every kubectl call is tedious, so run the commands below.

sudo mkdir -p $HOME/.kube

sudo cp kube_config_cluster.yml $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
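
Since this guide already runs as root, sudo and chown are not strictly needed here. A minimal sketch of the same step follows, which also restricts the file to its owner because it holds admin credentials; the helper name install_kubeconfig is hypothetical.

```shell
#!/bin/sh
# Copy a kubeconfig to the default location kubectl reads, owner-only.
# Hypothetical helper: $1 = kubeconfig file generated by rke up.
install_kubeconfig() {
    src="$1"
    mkdir -p "$HOME/.kube"
    cp "$src" "$HOME/.kube/config"
    chmod 600 "$HOME/.kube/config"
}

# Example:
#   install_kubeconfig kube_config_cluster.yml
```

Alternatively, setting the KUBECONFIG environment variable to the path of kube_config_cluster.yml has the same effect for the current shell without copying the file.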

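From then on, --kubeconfig kube_config_cluster.yml can be omitted, for example kubectl get nodes.

The Pods installed by default can be listed with kubectl get pods --all-namespaces.

That concludes the walkthrough of installing RKE on bare metal; the next post covers installing MetalLB, a LoadBalancer.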

Installing a LoadBalancer (MetalLB) on Kubernetes installed on bare metal
